Neural Scaling Laws (NSL)

Diverse studies on the emergent Neural Scaling Laws (NSL) of AI

Reconciling Kaplan and Chinchilla Scaling Laws

The Neural Scaling Law (NSL) [kaplan2020scaling, hoffmann2022training] describes how the performance of language models scales with three key factors: model size, training data size, and compute. Analyzing this law is important because it tells us how much performance gain to expect from scaling up AI models and data. However, the two studies [kaplan2020scaling, hoffmann2022training] empirically measured different scaling coefficients for large language models. In our paper “Reconciling Kaplan and Chinchilla Scaling Laws” (under review at TMLR), we aim to identify the origin of this discrepancy through analytic methods and by training small language models.
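To make “different scaling coefficients” concrete, here is a minimal sketch of how such a coefficient is typically estimated: a power law y = k·x^a becomes a straight line in log-log space, so a linear fit recovers the exponent a. The function names and the synthetic data are hypothetical and for illustration only. The two exponents used, roughly 0.73 and 0.50, are the commonly cited headline values for how compute-optimal model size N_opt grows with compute C in the Kaplan and Chinchilla papers respectively; this is not the analysis method of our paper.

```python
import numpy as np


def fit_power_law(x, y):
    """Fit y = k * x**a by ordinary least squares in log-log space; return (k, a)."""
    # log(y) = log(k) + a * log(x) is linear in log(x), so a degree-1 fit recovers a.
    a, log_k = np.polyfit(np.log(x), np.log(y), deg=1)
    return float(np.exp(log_k)), float(a)


def growth_factor(exponent, compute_multiplier):
    """Factor by which N_opt grows when compute is multiplied, if N_opt ∝ C**exponent."""
    return compute_multiplier ** exponent


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    compute = np.logspace(18, 22, num=9)  # hypothetical compute budgets (FLOPs)
    for label, true_a in [("Kaplan-like", 0.73), ("Chinchilla-like", 0.50)]:
        # Synthetic N_opt values with 5% multiplicative noise (not real training runs).
        n_opt = compute**true_a * rng.lognormal(0.0, 0.05, size=compute.size)
        _, a_hat = fit_power_law(compute, n_opt)
        print(f"{label}: fitted a = {a_hat:.3f}, "
              f"100x compute -> {growth_factor(a_hat, 100):.1f}x larger model")
```

The gap matters in practice: with a = 0.73, a 100x larger compute budget implies a model about 29x larger (100^0.73 ≈ 28.8), whereas a = 0.50 implies only 10x, with the remaining compute going into more training data.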

Origin of Neural Scaling Laws

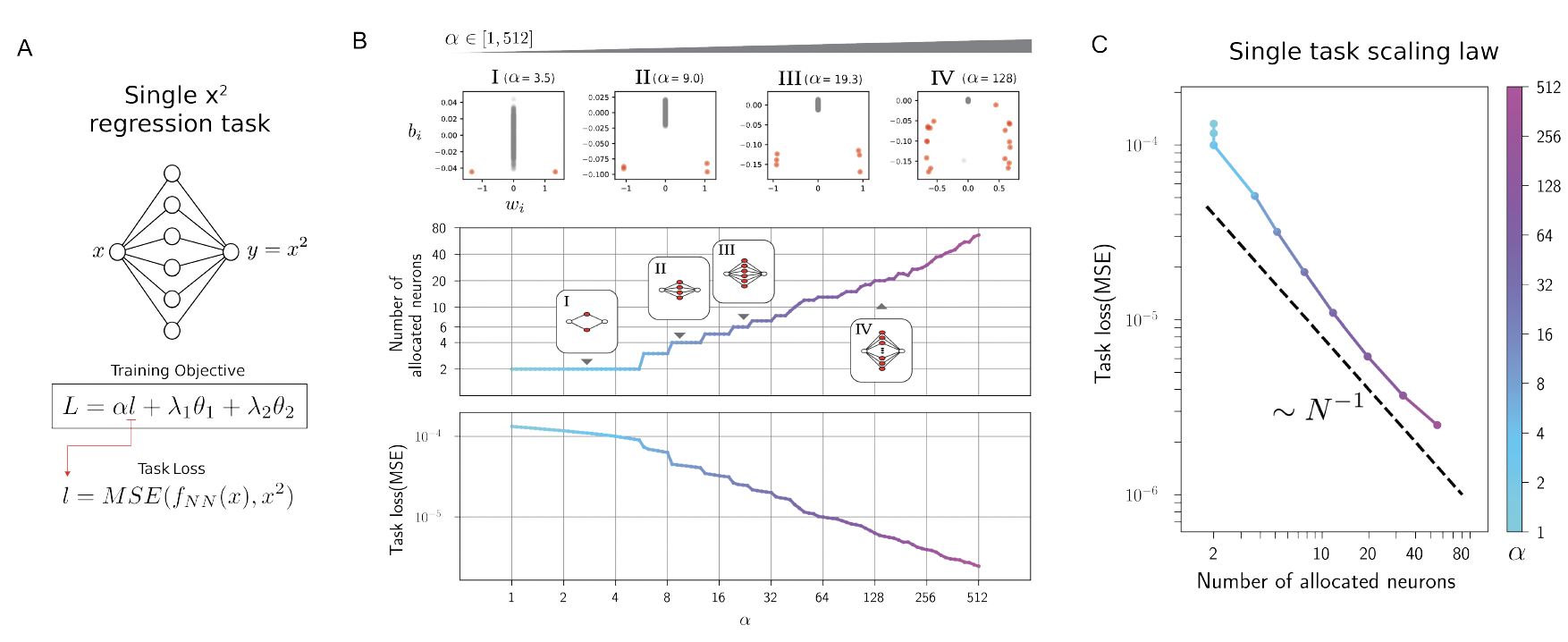

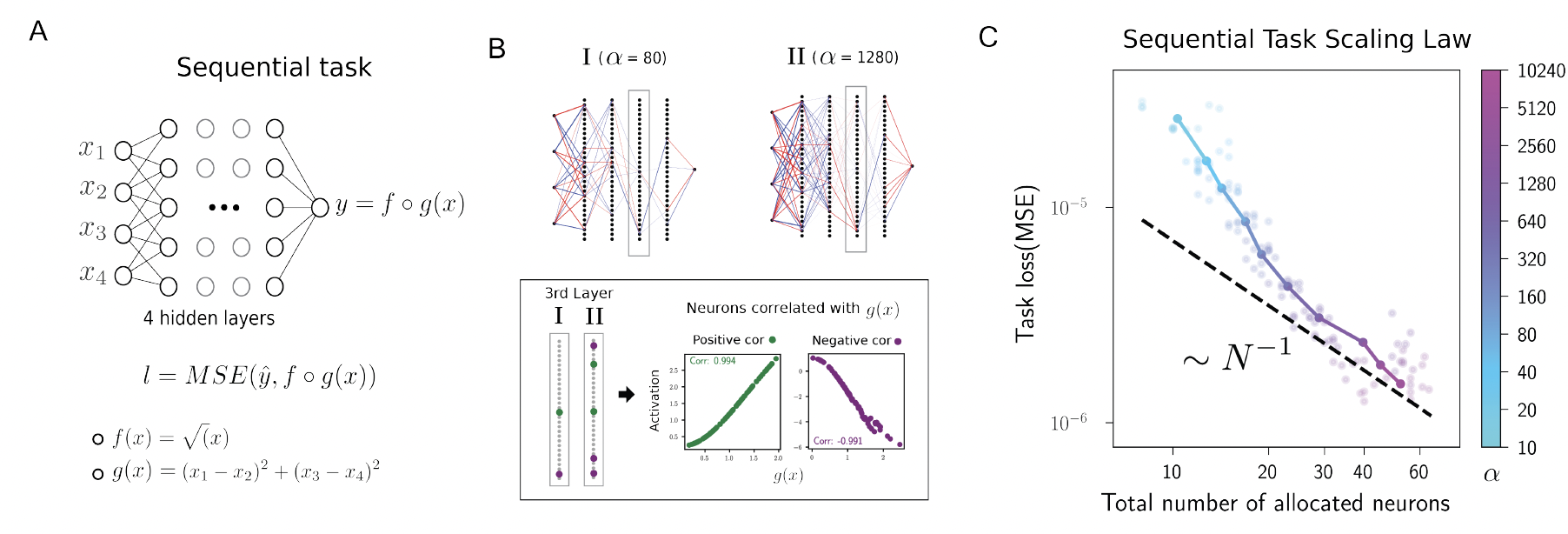

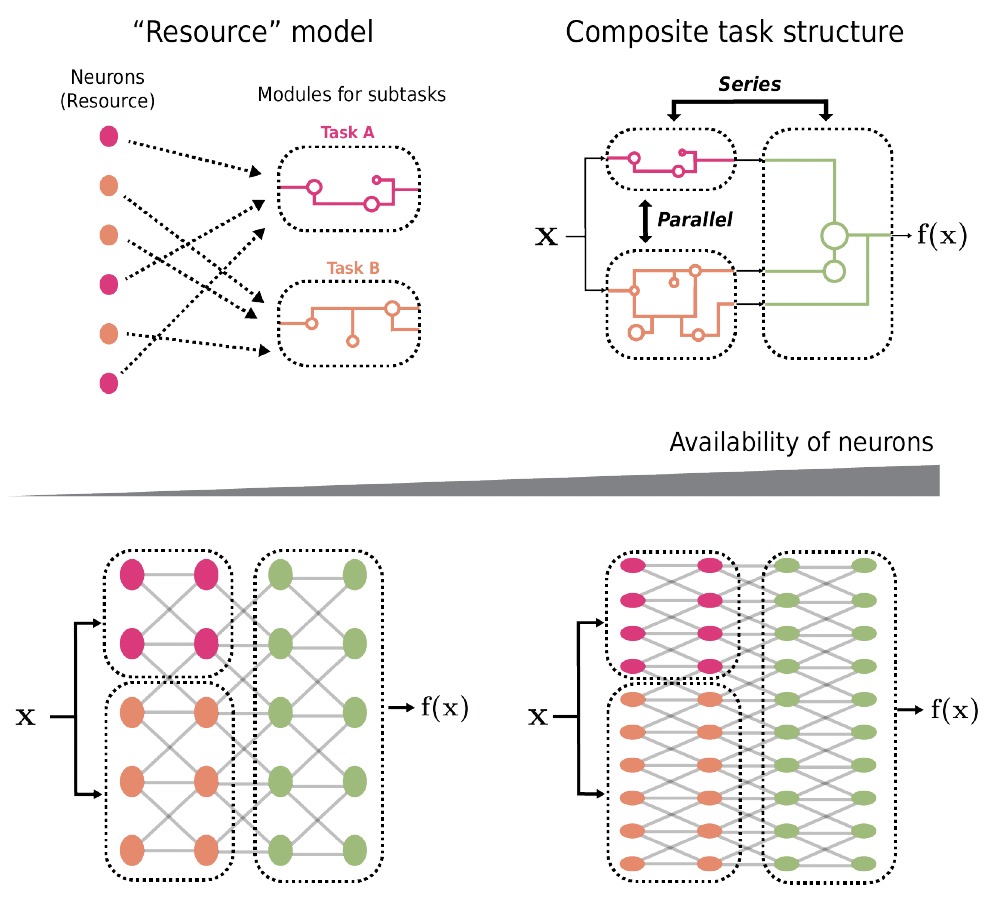

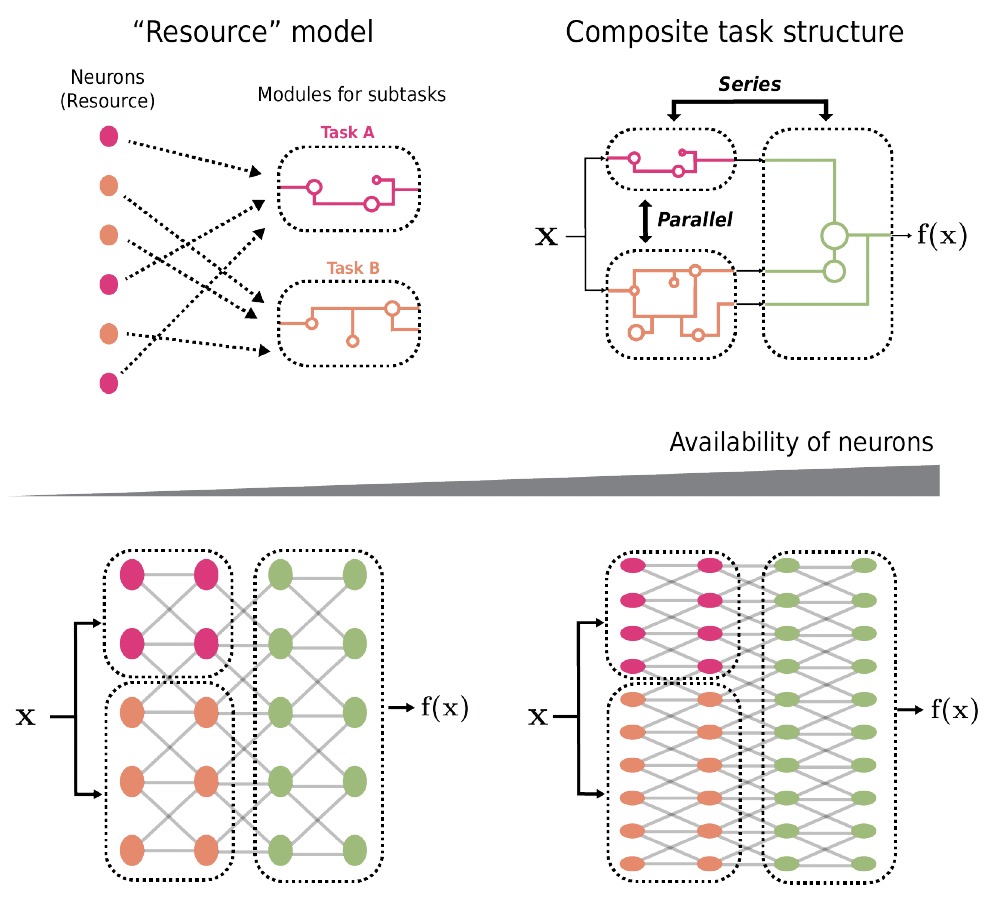

It remains unknown why such laws arise and why they hold across diverse tasks, including language, vision, and even proteins. In our earlier workshop paper at ICLR 2024, “A Resource Model for Neural Scaling Laws”, we proposed the “resource model” as a phenomenological model to explain the scaling laws of large language models (LLMs). The hypotheses of the resource model are as follows.

Based on the hypotheses of the resource model, we derive a scaling law with exponent -1 for general composite tasks.
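A minimal sketch of how an exponent of -1 can emerge for a composite task, under assumptions we state explicitly rather than quote from the paper: (1) the composite loss is the sum of its subtask losses, (2) each subtask receives a fixed fraction of the model's N neurons that stays constant as the model grows, and (3) each subtask's loss is inversely proportional to the neurons allocated to it. Under these assumptions the total loss is (Σ c_i / r_i) / N, i.e. proportional to N^-1. The functions `composite_loss` and `loglog_slope`, and the parameters `difficulties` and `fractions`, are hypothetical names for illustration.

```python
import numpy as np


def composite_loss(n_neurons, difficulties, fractions):
    """Total loss of a composite task under the assumed (hypothetical) rules.

    Assumptions for illustration:
      * subtask i receives a fixed share fractions[i] of n_neurons,
        unchanged as the model grows;
      * subtask i's loss is difficulties[i] / (neurons allocated to it);
      * the composite loss is the sum of subtask losses.
    """
    difficulties = np.asarray(difficulties, dtype=float)
    fractions = np.asarray(fractions, dtype=float)
    allocated = fractions * n_neurons  # neurons assigned to each subtask
    return float(np.sum(difficulties / allocated))


def loglog_slope(ns, losses):
    """Slope of log(loss) versus log(N); a (-1) scaling law gives slope -1."""
    slope, _ = np.polyfit(np.log(ns), np.log(losses), deg=1)
    return float(slope)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    k = 20                                    # hypothetical number of subtasks
    difficulties = rng.uniform(0.1, 2.0, size=k)
    fractions = rng.dirichlet(np.ones(k))     # resource shares summing to 1
    ns = np.logspace(2, 5, num=7)             # model sizes in neurons
    losses = [composite_loss(n, difficulties, fractions) for n in ns]
    print(f"log-log slope: {loglog_slope(ns, losses):.4f}")
```

Whatever the randomly drawn difficulties and shares are, the fitted slope is -1 (to four decimals), because keeping the resource ratios fixed makes every subtask loss shrink by the same factor as N grows.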

The figures in this section show the main results of our experiments (to be updated).