EELMA: Estimating Empowerment of Language Model Agents

MATS Program Winter 2024-25 - Proposing a scalable method for quantifying the empowerment of Language Model agents.

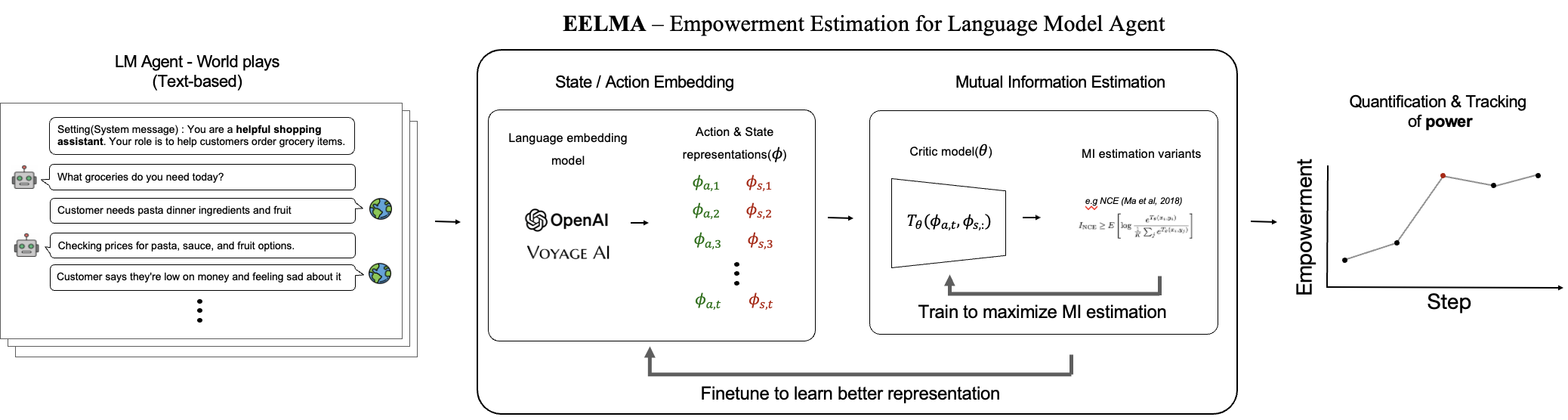

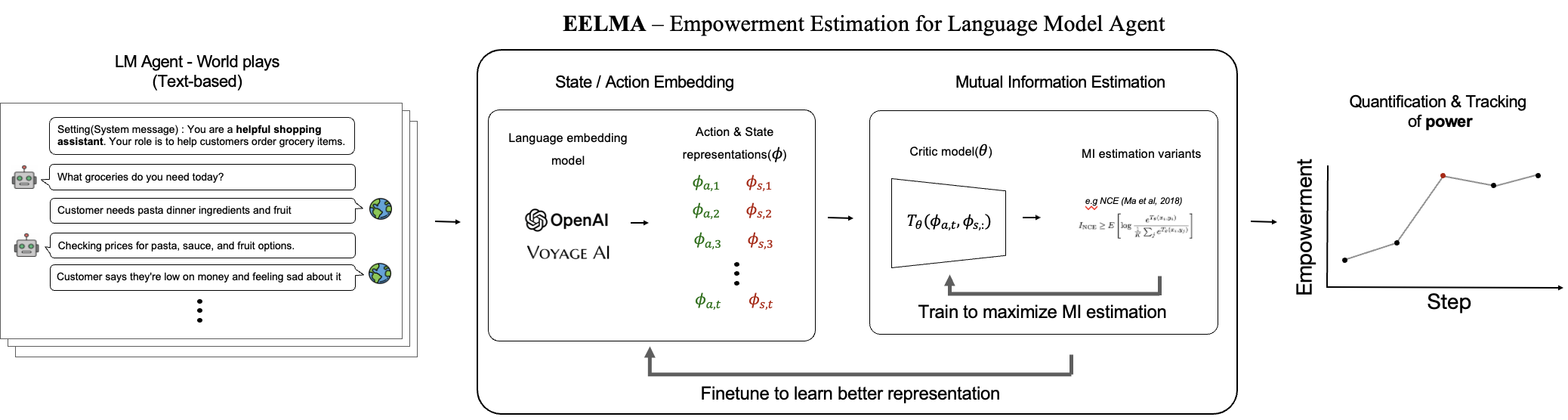

EELMA (Estimating Empowerment of Language Model Agents) provides a scalable method for quantifying the power of LM agents.

Summary

We propose a scalable method for quantifying the empowerment (i.e., one’s control over the future state) of a Language Model agent.

Abstract

As LLM agents become more intelligent and gain wider access to real-world tools, there is growing concern that they may develop power-seeking behavior. This is a potentially serious risk, as we currently lack empirical methods to measure power in LLM agents.

In this project, we address this problem for the first time by proposing a scalable method for quantifying the power of an LLM agent. Our method, EELMA (Estimating Empowerment of Language Model Agents), builds on information theory and contrastive learning to enable efficient estimation of empowerment from text-based interactions in a given environment or agentic task.

Threat Model: Power-Seeking LLM Agents

Power-seeking AIs are a significant threat. Power is loosely defined as the ability to achieve a wide range of goals. For example, “money is power” because money is instrumentally useful for many goals. Like humans, a superintelligent AI that is deployed in the real world and continually tasked with multiple goals under high uncertainty may simply choose to maximize its power. This is called power-seeking behavior.

The emergence of power-seeking AI is likely to be a significant threat because:

- Power-seeking agents are likely to be adversarial to human control

- AI systems with large computing resources can carry out more sophisticated power-seeking behavior

Method: Empowerment Estimation

EELMA quantifies power through empowerment, a measure of an agent’s ability to control its environment through its actions. Empowerment captures how much influence an agent’s actions have over its future states, which makes it a useful metric for detecting when an agent is gaining unintended power or capabilities.
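For reference, empowerment is commonly formalized in information-theoretic terms as the channel capacity between an agent's actions and the future states they lead to. The notation below (current state $s_t$, an $n$-step action sequence $A_t^{n}$, the resulting state $S_{t+n}$, and the horizon $n$) is an assumption chosen for illustration and may differ from the exact formulation used in the paper.

$$
\mathcal{E}(s_t) \;=\; \max_{p(a_t^{n} \mid s_t)} I\!\left(A_t^{n};\, S_{t+n} \mid s_t\right),
\qquad
I\!\left(A_t^{n};\, S_{t+n} \mid s_t\right) \;=\; \mathbb{E}_{p(a_t^{n},\, s_{t+n} \mid s_t)}\!\left[\log \frac{p(s_{t+n} \mid a_t^{n}, s_t)}{p(s_{t+n} \mid s_t)}\right]
$$

Under this reading, empowerment is high when different action choices lead to reliably distinguishable future states, and zero when the future state is independent of what the agent does. If the maximization is dropped and the agent's own policy is used for $p(a_t^{n} \mid s_t)$, the quantity reduces to the mutual information between the agent's actual actions and its future states.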

Technical Approach

To tackle the computational challenges of high-dimensional language spaces, we use two components:

- State Embedding Models: Fine-tune language embedding models to reduce dimensionality of agent states while preserving power-relevant information

- Contrastive Mutual Information Estimation: Implement a critic model using noise-contrastive estimation to approximate mutual information between agent actions and future states
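The two components above can be illustrated with a minimal sketch of a contrastive mutual information estimate. It assumes an InfoNCE-style bound, which is one common noise-contrastive estimator and not necessarily the exact objective used in EELMA. The names `dot_critic`, `score_matrix`, and `infonce_lower_bound` are hypothetical, the critic is a fixed dot product rather than a trained model, and the embeddings are synthetic vectors standing in for the output of a fine-tuned state embedding model.

```python
import math
import random
from typing import List, Sequence

Vector = Sequence[float]


def dot_critic(action_emb: Vector, state_emb: Vector) -> float:
    """Hypothetical critic: a plain dot product between two embeddings.

    A learned critic would replace this; it is fixed here to keep the sketch small.
    """
    return sum(a * s for a, s in zip(action_emb, state_emb))


def score_matrix(actions: Sequence[Vector], future_states: Sequence[Vector]) -> List[List[float]]:
    """scores[i][j] = critic(action_i, future_state_j); the diagonal holds true pairs."""
    return [[dot_critic(a, s) for s in future_states] for a in actions]


def infonce_lower_bound(scores: Sequence[Sequence[float]]) -> float:
    """InfoNCE lower bound on I(action; future state), in nats.

    For a batch of K pairs the bound is
        mean_i [ scores[i][i] - logsumexp_j scores[i][j] ] + log K,
    which can never exceed log K.
    """
    k = len(scores)
    total = 0.0
    for i, row in enumerate(scores):
        row_max = max(row)  # subtract the max for numerical stability
        log_norm = row_max + math.log(sum(math.exp(v - row_max) for v in row))
        total += row[i] - log_norm
    return total / k + math.log(k)


if __name__ == "__main__":
    rng = random.Random(0)
    dim, batch = 8, 32
    actions = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(batch)]
    # Toy "controllable" setting: the future state is the action plus small noise.
    controlled = [[a + 0.1 * rng.gauss(0, 1) for a in vec] for vec in actions]
    # Toy "uncontrollable" setting: the future state ignores the action entirely.
    uncontrolled = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(batch)]

    print("controlled  :", infonce_lower_bound(score_matrix(actions, controlled)))
    print("uncontrolled:", infonce_lower_bound(score_matrix(actions, uncontrolled)))
    print("upper limit :", math.log(batch))
```

Because the estimate is capped at the log of the batch size, larger batches are needed to resolve larger mutual information values. With an untrained critic the number is only a loose bound, so in practice the critic would be trained to maximize this same quantity.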

Expected Impact

- Foundational methodology for studying the power of LM agents

- Practical control system for monitoring power-seeking behavior

- Integration with RL as an alignment objective

Research Context

This work was conducted as part of the MATS (ML Alignment & Theory Scholars) 7.0 cohort, with mentor Max Kleiman-Weiner (University of Washington).

Citation

@article{song2025empowerment,
  title={Estimating Empowerment of Language Model Agents},
  author={Song, Jinyeop and Gore, Jeff and Kleiman-Weiner, Max},
  journal={arXiv preprint arXiv:2509.22504},
  year={2025}
}

Paper: arXiv:2509.22504